The ViBS team carries out both experimental and methodological research on EEG, ECG, oculometry (eye tracking) and video.

The work of the team is characterized by a multidimensional and multimodal approach.

ViBS manages the PerSee experimental room.

Publications

Code Repository (coming soon)

Research Projects:

Key figures

6.5

permanent staff

10

PhD students & postdocs

Research areas

ViBS research topics are grouped into 9 main areas:

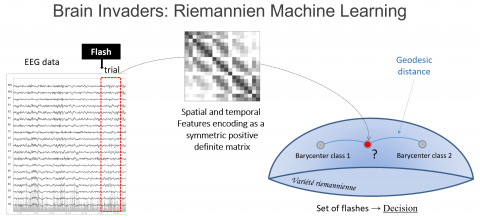

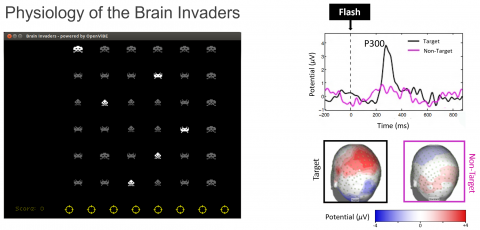

- the Brain Invaders brain-computer interface, developed as a video game.

- Gaze-EEG co-registration (combined acquisition of electroencephalography and eye tracking), used for the multimodal analysis of cognitive functions, particularly during cognitive tasks that require highly accurate timing synchronization.

- multistable visual perception: developing methods, models and experiments that help understand the dynamics of visual perception, using stimulation with ambiguous visual stimuli.

- the development of analysis tools and theoretical advances in Riemannian geometry applied to data processing.

- transfer learning for brain-computer interfaces: designing EEG-based transfer learning methods that build on recent advances in Riemannian geometry.

- intra-saccadic perception: studying the activation of the visual pathways during an ocular saccade.

- multimodal non-invasive fetal monitoring: multimodal monitoring (ECG, PCG, CTG) of the fetus at different stages of pregnancy and delivery.

- experimental design: optimizing the number and location of sensors to extract information buried in noise.

- factorization of multimodal observations, applied to ECG, PCG and EMG.

Keywords

Riemannian geometry, brain-computer interface, tinnitus, EEG, decoding, data analysis, signal-to-noise ratio, transfer learning, P300 classification, approximate joint diagonalization, ICA, EMG, tensor decomposition, eye movements, Kalman filter, electrocardiography, sensor placement, alternating optimization, penalization, eye tracking, multistable perception, fetal monitoring

ViBS in pictures

Projects

Hi-Fi

2020-2024

Funding: ANR-20-CE17-0023

Coordinator: J. MATTOUT

EMOOL

2023-2026

Funding: ANR ASTRID

Coordinator: Aurélie Campagne (MCF UGA, LPNC Grenoble)

Gaze-predict

2023

Funding: IRGA UGA

Coordinators: Nathalie GUYADER and C. PEYRIN (DR CNRS, LPNC)

MAFMONITO

2024

Funding: IRGA UGA

Coordinators: Bertrand RIVET and J. FONTECAVE (TIMC)

SurFAO

2017-2024

Funding: ANR-17-CE19-0012

Coordinators: Bertrand RIVET and J. FONTECAVE (TIMC)

Vision-3E

2022-2026

Funding: ANR-21-CE37-0018

Coordinator: Anna MONTAGNINI (Institut de Neurosciences de la Timone)

APHANTASIA

Funding:

Coordinator: Gaën PLANCHER

Partners

Academic partners

- INSERM, Lyon Neuroscience Center, France

- Department of Surgical Sciences, University of Otago, Dunedin, New Zealand

- LPNC, Grenoble, France

- EMC, Lyon, Grenoble

- TIMC, Grenoble

- Sharif University of Technology, Iran

- University of Campinas, Brazil

- Lulea University, Sweden

Industrial partners

Highlights

2023

Christian JUTTEN, recipient of the 2023 Claude Shannon-Harry Nyquist Technical Achievement Award from the IEEE Signal Processing Society

2022

Organization of the 4th CORTICO international BCI (Brain-Computer Interface) conference

2021

Emmanuelle KRISTENSEN, featured in the CNRS comic book Les décodeuses du numérique

2020

- co-edits two books for the general public published by CNRS Editions: Vers le cyber-monde - Humain et numérique en interaction and Le corps en images - Les nouvelles imageries pour la santé.

- appointed editor-in-chief of IEEE Signal Processing Magazine

- appointed by the CNRS to advise on the design and construction of the information-science exhibits of the future Palais de la Découverte

2019

Christian JUTTEN, appointed chargé de mission to the CNRS presidency for the Scientific Integrity Mission (Mission à l’Intégrité Scientifique, MIS)

Learn more about ViBS research results


Brain Invaders

Brain Invaders is a brain-computer interface (BCI) developed as an open-source video game built on the OpenViBE platform. It was the first interface to integrate a classifier based on Riemannian geometry and to offer a calibration-free operating mode. It is a permanent demonstrator at GIPSA-lab.

Coordinator:

Marco Congedo

Scientific publications

Andreev et al., 2016; Cattan et al., 2021; Congedo et al., 2011; Korczowski et al., 2016
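A minimum-distance-to-mean (MDM) scheme is a common way to build a Riemannian classifier like the one mentioned above. The sketch below is not the Brain Invaders code. It assumes that each trial has already been summarized as a symmetric positive-definite (SPD) covariance matrix. It assigns each trial to the class whose mean covariance is nearest in affine-invariant Riemannian distance. For brevity, it uses the log-Euclidean mean as a stand-in for the Riemannian geometric mean. The names `MDM`, `riemann_distance` and `log_euclidean_mean` are hypothetical.

```python
import numpy as np


def _eig_fn(C, fn):
    """Apply a scalar function to the eigenvalues of a symmetric matrix C."""
    w, V = np.linalg.eigh(C)
    return (V * fn(w)) @ V.T


def riemann_distance(A, B):
    """Affine-invariant distance: Frobenius norm of log(A^{-1/2} B A^{-1/2})."""
    A_isqrt = _eig_fn(A, lambda w: 1.0 / np.sqrt(w))
    w = np.linalg.eigvalsh(A_isqrt @ B @ A_isqrt)
    return float(np.sqrt(np.sum(np.log(w) ** 2)))


def log_euclidean_mean(covs):
    """exp(mean_k log C_k): a simplified stand-in for the geometric mean."""
    logs = [_eig_fn(C, np.log) for C in covs]
    return _eig_fn(np.mean(logs, axis=0), np.exp)


class MDM:
    """Minimum distance to mean classifier (illustrative sketch)."""

    def fit(self, covs, labels):
        self.classes_ = sorted(set(labels))
        # One mean covariance matrix per class
        self.means_ = {
            k: log_euclidean_mean([C for C, y in zip(covs, labels) if y == k])
            for k in self.classes_
        }
        return self

    def predict(self, covs):
        # Assign each trial to the class with the nearest mean
        return [
            min(self.classes_, key=lambda k: riemann_distance(self.means_[k], C))
            for C in covs
        ]
```

For real use, the open-source pyRiemann package provides a maintained `MDM` classifier. The sketch only shows the geometry involved.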

Gaze-EEG Co-Registration and Analysis

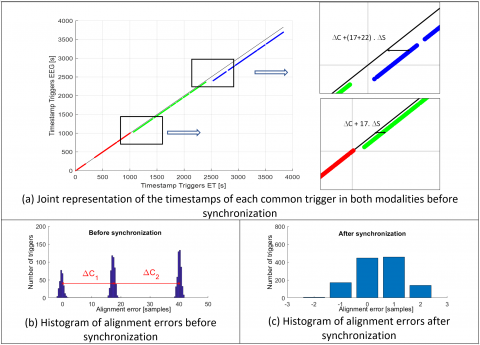

Gaze-EEG co-registration is the joint acquisition of electroencephalography (EEG) and eye-tracking (ET) signals. This co-registration technique is an effective way to probe cognitive processes. It also allows for more ecological experimental protocols, which are not constrained by "external" markers such as the onset of a word or image, and in which participants can make fixations at their own pace. This type of joint acquisition therefore adds strong value for understanding the time course of neural activity during cognitive tasks that require highly accurate timing synchronization.

To this end, we have developed a method for synchronizing EEG and gaze signals. Synchronization through common triggers is used by all existing methods because it is simple and effective. However, this solution fails in certain practical situations. One is when triggers are spuriously detected. Another is when the relationship between the trigger timestamps recorded by each acquisition device is not linear over the entire duration of an experiment. To overcome these limitations of the classical common-trigger method, we propose two additional mechanisms: the "Longest Common Subsequence" algorithm and a piecewise linear regression. The resulting method is implemented in C++ as a DOS (command-line) application that is very fast, robust and fully automatic. Everything, including examples on real data, is available in the Zenodo repository (https://doi.org/10.5281/zenodo.5554071).
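The trigger-matching step can be sketched as a classic longest-common-subsequence alignment of the two trigger-code sequences. This alignment naturally skips spurious or missing triggers in either stream. The sketch is not the Zenodo C++ implementation. It assumes that each device logs a list of trigger codes in temporal order, and it matches on codes alone. The name `match_triggers` is hypothetical.

```python
def match_triggers(codes_a, codes_b):
    """Pair triggers of two devices via a longest common subsequence of codes.

    Returns a list of (i, j) index pairs with codes_a[i] == codes_b[j],
    increasing in both i and j. Spurious or missing triggers in either
    stream are left unmatched.
    """
    n, m = len(codes_a), len(codes_b)
    # L[i][j] = LCS length of the suffixes codes_a[i:] and codes_b[j:]
    L = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n - 1, -1, -1):
        for j in range(m - 1, -1, -1):
            if codes_a[i] == codes_b[j]:
                L[i][j] = L[i + 1][j + 1] + 1
            else:
                L[i][j] = max(L[i + 1][j], L[i][j + 1])
    # Walk forward through the table to recover one optimal alignment
    pairs, i, j = [], 0, 0
    while i < n and j < m:
        if codes_a[i] == codes_b[j]:
            pairs.append((i, j))
            i += 1
            j += 1
        elif L[i + 1][j] >= L[i][j + 1]:
            i += 1  # codes_a[i] has no partner in this alignment
        else:
            j += 1  # codes_b[j] has no partner in this alignment
    return pairs
```

For example, if the eye tracker logs `[1, 2, 9, 3, 1, 3]` (a spurious 9 and a missing 2) against the EEG sequence `[1, 2, 3, 1, 2, 3]`, five triggers are paired and the extra or missing ones are skipped. The table uses O(n·m) memory, which is acceptable for moderate trigger counts.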

We illustrate the results on a dataset recorded with two pauses. After trigger matching, we observe temporal shifts between the timestamps of the same triggers in each modality (figures (a) and (b)). After synchronization (figure (c)), the two modalities are well realigned: the alignment error is confined to ±2 samples, owing to computational rounding.
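Recordings with pauses, as in this example, are where a single linear clock model breaks down. Below is a minimal sketch of a piecewise linear mapping from one device's clock to the other's. It makes three assumptions:

- the trigger pairs are already matched;
- the breakpoints (e.g. pause times on device A) are known;
- each segment contains at least two distinct timestamps.

How the actual tool locates the breakpoints is not described here. The function names are hypothetical.

```python
from bisect import bisect_right


def fit_line(x, y):
    """Ordinary least-squares fit y ~ a * x + b; returns (a, b)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    a = sxy / sxx
    return a, my - a * mx


def fit_piecewise(t_a, t_b, breakpoints):
    """Fit one line per segment of device-A time delimited by sorted breakpoints.

    t_a, t_b: matched trigger timestamps on devices A and B (same length).
    Returns (breakpoints, [(a, b) for each segment]).
    """
    n_seg = len(breakpoints) + 1
    xs = [[] for _ in range(n_seg)]
    ys = [[] for _ in range(n_seg)]
    for xa, xb in zip(t_a, t_b):
        k = bisect_right(breakpoints, xa)  # index of the segment containing xa
        xs[k].append(xa)
        ys[k].append(xb)
    return breakpoints, [fit_line(x, y) for x, y in zip(xs, ys)]


def map_time(model, t):
    """Convert a device-A timestamp t into device-B time."""
    breakpoints, lines = model
    a, b = lines[bisect_right(breakpoints, t)]
    return a * t + b
```

Each segment gets its own drift (slope) and offset (intercept), which absorbs the jumps introduced by recording pauses.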

After synchronization, EEG signals time-locked to eye events are called Eye Fixation- or Saccade-Related Potentials (EFRP / ESRP). However, estimating these visually evoked potentials raises two major concerns: (1) their overlap with the potential elicited at stimulus onset and with each other, and (2) the influence of eye movements on EEG amplitude. Numerous deconvolution methods have been developed for these estimations. Among them, the most widely used are based on the General Linear Model combined with spline regression, either to account for confounding variables or to study the impact of a covariate of interest on EEG amplitude (or both). See section “Intra-saccadic motion perception” for an application example.
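The overlap problem described above can be written as a linear model. The EEG is modeled as the sum of one unknown response per event type, shifted to every onset of that type, so overlapping responses simply add up. The minimal sketch below assumes known integer onset latencies (in samples), a single channel, and a fixed response window of `n_lags` samples. It omits the spline terms and the regularization discussed by Kristensen et al. (2017). The function names are hypothetical.

```python
import numpy as np


def design_matrix(n_samples, onsets_by_type, n_lags):
    """Time-expanded (FIR) design matrix: one column per (event type, lag).

    onsets_by_type: list of integer onset arrays, one per event type.
    Column k * n_lags + lag is 1 at sample onset + lag for every onset of
    type k, so responses to overlapping events add up in the model.
    """
    X = np.zeros((n_samples, len(onsets_by_type) * n_lags))
    for k, onsets in enumerate(onsets_by_type):
        for t0 in onsets:
            for lag in range(n_lags):
                if t0 + lag < n_samples:
                    X[t0 + lag, k * n_lags + lag] += 1.0
    return X


def deconvolve(eeg, onsets_by_type, n_lags):
    """Least-squares estimate of each event type's response (n_types x n_lags)."""
    X = design_matrix(len(eeg), onsets_by_type, n_lags)
    beta, *_ = np.linalg.lstsq(X, eeg, rcond=None)
    return beta.reshape(len(onsets_by_type), n_lags)
```

The responses are identifiable only when the delays between event types vary across trials, which is the case for self-paced fixations. With constant delays, the columns become collinear.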

Coordinator:

Anne Guérin-Dugué (PR, ViBS)

Involved researchers:

Emmanuelle Kristensen (IR, ViBS), B. Rivet (MCF, ViBS)

Scientific publications

Ionescu, G., Frey, A., Guyader, N., Kristensen, E., Andreev, A., & Guérin-Dugué, A. (2021). Synchronization of acquisition devices in neuroimaging: An application using co-registration of eye movements and electroencephalography. Behavior Research Methods, 54(5), 2545-2564. doi:10.3758/s13428-021-01756-6

Guérin-Dugué, A., Roy, R.N., Kristensen, E., Rivet, B., Vercueil, L., & Tcherkassof, A. (2018). Temporal Dynamics of Natural Static Emotional Facial Expressions Decoding: A Study Using Event- and Eye Fixation-Related Potentials. Frontiers in Psychology, 9, 1190 (special issue Dynamic Emotional Communication, section Emotion Science).

Frey, A., Lemaire, B., Vercueil, L., & Guérin-Dugué, A. (2018). An eye fixation-related potential study in two reading tasks: read to memorize and read to make a decision. Brain Topography, 31, 640–660. doi:10.1007/s10548-018-0629-8

Kristensen, E., Guérin-Dugué, A., & Rivet, B. (2017). Regularization and a general linear model for event-related potential estimation. Behavior Research Methods, 49(6), 2255-2274. doi:10.3758/s13428-017-0856-z

Kristensen, E., Rivet, B., & Guérin-Dugué, A. (2017). Estimation of overlapped Eye Fixation Related Potentials: The General Linear Model, a more flexible framework than the ADJAR algorithm. Journal of Eye Movement Research, 10(1), 1-27.

Devillez, H., Guyader, N., & Guérin-Dugué, A. (2015). An eye fixation-related potentials analysis of the P300 potential for fixations onto a target object when exploring natural scenes. Journal of Vision, 15(13), 1-31.

Frey, A., Ionescu, G., Lemaire, B., Lopez Orozco, F., Baccino, T., & Guérin-Dugué, A. (2013). Decision-making in information seeking on texts: an Eye-Fixation-Related Potentials investigation. Frontiers in Systems Neuroscience, 7, Article 39.


Intra-Saccadic Motion Perception

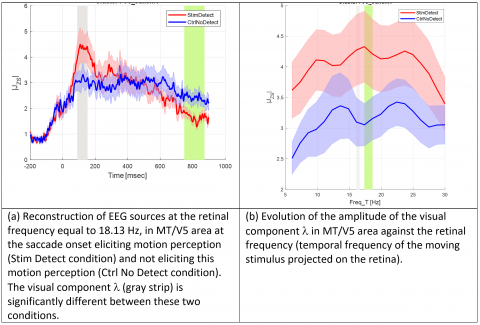

Retinal motion of the visual scene is not consciously perceived during ocular saccades under normal everyday conditions. However, Castet and Masson (2000) demonstrated that motion can be perceived during a saccade when using stimuli optimized to preferentially activate the M-pathway (magnocellular pathway). Building on this psychophysical paradigm, we used electroencephalography and eye-tracking recordings to investigate the neural correlates of the conscious perception of intra-saccadic motion. We demonstrated that the cortical areas V1-V2 and MT/V5, which convey motion information along the M-pathway, are effectively involved during saccades. We also showed that individual motion perception was related to retinal temporal frequency.
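The retinal temporal frequency mentioned above follows from simple kinematics. Assume a grating with spatial frequency f (cycles/deg) drifting at velocity v_stim (deg/s) while the eye moves at v_eye along the same axis. The image then slips on the retina at v_stim − v_eye, so the retinal temporal frequency is f · |v_stim − v_eye| Hz. Below is a minimal sketch with hypothetical names. The values in the usage note are illustrative, not the study's parameters.

```python
def retinal_temporal_frequency(sf_cpd, v_stim_dps, v_eye_dps):
    """Temporal frequency (Hz) of a grating's image on the retina.

    sf_cpd: spatial frequency in cycles per degree.
    v_stim_dps, v_eye_dps: signed stimulus and eye velocities (deg/s),
    measured along the axis orthogonal to the grating's bars.
    """
    retinal_velocity = v_stim_dps - v_eye_dps  # image slip on the retina
    return sf_cpd * abs(retinal_velocity)


def temporal_frequency_profile(sf_cpd, v_stim_dps, eye_velocities):
    """Retinal temporal frequency at each sample of a saccade velocity trace."""
    return [retinal_temporal_frequency(sf_cpd, v_stim_dps, v) for v in eye_velocities]
```

For example, a 0.2 cycles/deg grating moving at 300 deg/s, viewed while the eye moves at 250 deg/s in the same direction, gives about 0.2 × 50 = 10 Hz on the retina. When the eye exactly tracks the stimulus, the image is stabilized and the temporal frequency drops to 0 Hz.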

The experiment was then repeated with more EEG electrodes and with an anatomical brain image of each participant, in order to perform EEG source reconstruction. The results confirm that visual areas V1, V2 and MT/V5 along the magnocellular pathway are activated differently depending on whether the stimulus motion is perceived or not. Specifically, we applied deconvolution and spline regression to the gaze-EEG signals recorded during the intra-saccadic experiment (see section “Gaze-EEG co-registration and analysis”). The sources reconstructed in area MT/V5 (figure (a)) then show significant differences when motion is perceived. Moreover, their variation as a function of the temporal frequency of the moving stimulus projected onto the retina (figure (b)) shows an optimal interval consistent with the bandwidth of the motion detectors in area MT/V5.

Coordinator:

Anne Guérin-Dugué (PR, ViBS)

Involved researchers:

E. Kristensen (IR, ViBS), Gaëlle Nicolas (PhD, ViBS)

In collaboration with:

Eric Castet (DR, LPC, AMU, Marseille), Michel Dojat (DR, GIN, UGA, Grenoble)

Scientific publications

Nicolas G., Castet E., Rabier A., Kristensen E., Dojat M., Guérin-Dugué A. (2021) Neural correlates of intra-saccadic motion perception. Journal of Vision, 21(11):19. doi:10.1167/jov.21.11.19.


Multistable Visual Perception

Perceiving is essentially an active decision process that makes sense of uncertain sensory signals. Indeed, information about our environment is often noisy or ambiguous at the sensory level, and it must be processed and disambiguated to yield a coherent perception of the world we live in. For instance, in vision we only have access to two-dimensional (2D) projections of the surrounding 3D environment, which leads to an intrinsic ambiguity for perception.

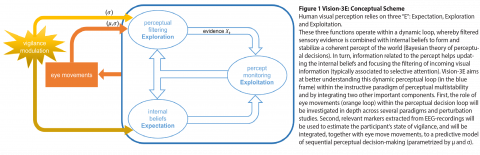

We aim to gather behavioural and physiological evidence about the three key functions in the closed-loop processing of human visual perception (expectation, exploration and exploitation), in order to better understand and model their interaction.

The main goal of this project is to find (electro-)physiological markers of evidence accumulation that could feed a drift-diffusion model underlying active vision. The resulting drift-diffusion process feeds a Markov decision process. We use ambiguous visual stimuli to force alternating perceptual decisions within subjects. To support our hypotheses, we collect electroencephalographic (EEG), gaze and behavioural data. We also apply interventional transcranial magnetic stimulation (TMS) at the IRMaGe platform, with stimuli built using the PsychoPy software. Data are analysed with in-house algorithms that leverage the MNE software for EEG analysis.
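The sketch below illustrates the evidence-accumulation idea; it is not the project's model. It simulates a single drift-diffusion trial with the Euler-Maruyama scheme. Evidence starts at zero and accumulates a constant drift plus Gaussian noise, and a decision is made when the evidence reaches ±threshold. The parameter values and the name `simulate_ddm` are assumptions. How the resulting decisions feed a Markov decision process is not shown.

```python
import math
import random


def simulate_ddm(drift, threshold, noise=1.0, dt=0.001, max_time=5.0, rng=None):
    """Simulate one drift-diffusion trial (Euler-Maruyama discretization).

    Evidence x starts at 0; each step adds drift * dt plus Gaussian noise
    with standard deviation noise * sqrt(dt). Returns (choice, rt), where
    choice is +1 or -1 when |x| reaches threshold, or (0, max_time) if no
    boundary is reached in time.
    """
    if rng is None:
        rng = random.Random()
    x, t = 0.0, 0.0
    step_sd = noise * math.sqrt(dt)
    while t < max_time:
        x += drift * dt + rng.gauss(0.0, step_sd)
        t += dt
        if abs(x) >= threshold:
            return (1 if x > 0 else -1), t
    return 0, max_time
```

With a positive drift, most trials end at the upper boundary. For this symmetric setup, the expected fraction is 1 / (1 + exp(-2 · drift · threshold / noise²)), about 0.98 for drift 2 and threshold 1. The response times form the right-skewed distribution typical of perceptual decisions.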

Coordinator:

Ronald Phlypo

In collaboration with Alan Chauvin (LPNC, Vision & Emotion team), Anne Guerin (GIPSA-lab, ViBS), Anna Montagnini (Neuroscience Institute - La Timone, Univ Aix-Marseille) and Steeve Zozor (GIPSA-lab, GAIA).

Other collaborators (past and present): Sayeh Gholipour-Picha, Najmeh Kheram, Juliette Lenouvel, Florian Millecamps, Ysamin Navarrete, Kevin Parisot.

Images on this project page are extracted from the proposal for the AAPG2021 grant ANR-PRC VISION-3E.

This work has benefited, or still benefits, from the following funding:

- the French National Research Agency [ANR](https://anr.fr), through the AAPG2021 grant ANR-PRC VISION-3E;
- ANR-15-IDEX-02 "investissements d'avenir", through the IDEX-IRS2020 grant ARChIVA;
- the NeuroCog initiative;
- CNRS INS2I, through the PEPS-INS2I-2016 grant MUSPIN-B.


Riemannian Geometry

This research project aims to develop new tools and to make theoretical advances in applied Riemannian geometry. For instance:

- visualization and manipulation of data in the Riemannian manifold of symmetric positive-definite matrices (Congedo and Barachant, 2014).

- an algorithm for estimating the geometric mean of a set of positive-definite matrices using approximate joint diagonalization (Congedo et al., 2015).

- a fast algorithm for estimating power means of positive-definite matrices (Congedo et al., 2017).

- the solution to the Procrustes problem for positive-definite matrices (Bhatia and Congedo, 2019).

- finding approximate joint diagonalizers using Riemannian optimization (Alyani et al., 2017; Bouchard et al., 2018; Bouchard et al., 2020).
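Several of the items above concern the geometric mean of SPD matrices under the affine-invariant metric. The cited algorithms estimate it via approximate joint diagonalization (Congedo et al., 2015) or power means (Congedo et al., 2017). The sketch below instead uses the classical textbook Riemannian gradient iteration (Karcher flow), only to make the object concrete. It assumes well-conditioned SPD inputs, and `karcher_mean` is a hypothetical name.

```python
import numpy as np


def _sym_fn(C, fn):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    w, V = np.linalg.eigh(C)
    return (V * fn(w)) @ V.T


def _sym(C):
    """Remove the small asymmetry introduced by floating-point rounding."""
    return 0.5 * (C + C.T)


def karcher_mean(covs, tol=1e-10, max_iter=100):
    """Affine-invariant geometric mean of SPD matrices (Karcher flow).

    Each iteration maps the matrices to the tangent space at the current
    estimate M, averages them there, and maps the average back:
        M <- M^{1/2} exp(mean_k log(M^{-1/2} C_k M^{-1/2})) M^{1/2}
    """
    M = np.mean(covs, axis=0)  # arithmetic mean as the initial guess
    for _ in range(max_iter):
        M_sqrt = _sym_fn(M, np.sqrt)
        M_isqrt = _sym_fn(M, lambda w: 1.0 / np.sqrt(w))
        T = np.mean([_sym_fn(_sym(M_isqrt @ C @ M_isqrt), np.log) for C in covs], axis=0)
        M = _sym(M_sqrt @ _sym_fn(T, np.exp) @ M_sqrt)
        if np.linalg.norm(T) < tol:  # tangent-space mean ~ 0: fixed point reached
            break
    return M
```

For two matrices A and B, the result coincides with the geodesic midpoint A^{1/2} (A^{-1/2} B A^{-1/2})^{1/2} A^{1/2}. For commuting matrices, it reduces to exp of the average logarithm.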

Coordinator:

Marco Congedo

Transfer Learning in Brain-Computer Interface

We have designed several transfer learning methods for EEG-based brain-computer interfaces.

All of these methods were made possible by recent advances in Riemannian geometry.

They are of two kinds:

- unsupervised (Barachant, 2013; Zanini et al., 2017), or

- semi-supervised (Bleuzé et al., 2021; Rodrigues et al., 2019; Rodrigues et al., 2021).

We are currently working on a method for group transfer learning.
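The unsupervised approach of Zanini et al. (2017) re-centers each domain (session or subject) so that its reference covariance matrix becomes the identity. This makes data from different domains comparable. Below is a minimal sketch of that re-centering step, assuming that trials are already SPD covariance matrices. For brevity, it uses the arithmetic mean as the reference rather than a Riemannian mean. The name `recenter` is hypothetical.

```python
import numpy as np


def _sym_fn(C, fn):
    """Apply a scalar function to the eigenvalues of a symmetric matrix."""
    w, V = np.linalg.eigh(C)
    return (V * fn(w)) @ V.T


def recenter(covs, reference=None):
    """Whiten one domain's covariances by its reference: C -> R^{-1/2} C R^{-1/2}.

    covs: array of shape (n_trials, n_channels, n_channels) from a single
    domain (session or subject). If no reference is given, the arithmetic
    mean is used as a simplified stand-in for the Riemannian mean.
    """
    covs = np.asarray(covs)
    R = covs.mean(axis=0) if reference is None else reference
    R_isqrt = _sym_fn(R, lambda w: 1.0 / np.sqrt(w))
    # Broadcasting matmul applies the same congruence to every trial
    return R_isqrt @ covs @ R_isqrt
```

No labels are needed, which is what makes this step unsupervised. With the arithmetic mean as reference, two domains related by a congruence C -> A C Aᵀ become equal up to a rotation after re-centering, so their eigenvalue spectra coincide.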

Coordinator:

Marco Congedo